Every day, more professionals use ChatGPT in their work, and with good reason: OpenAI’s chatbot can simplify many of the most tedious tasks. However, you need to be aware of one important point: if used carelessly, ChatGPT can put your data and your customers’ data at risk.

What are the privacy risks?

In March 2023, ChatGPT was suspended in Italy because of privacy concerns. The Italian Data Protection Authority found that OpenAI didn’t clearly explain how it would use the data it collected. OpenAI then addressed the issue, including by providing a more detailed privacy policy.

When we talk about privacy risks related to ChatGPT, we refer to two main cases:

- The data that OpenAI collects through ChatGPT to train its models.

- The risk of data breaches that may result from system failures. For example, in March 2023 a bug in ChatGPT exposed some users’ conversation titles and the payment details of a small group of subscribers to other users.

Now let’s look at three practical tips to protect your data and limit the risks.

1. Limit data sharing

In its privacy policy, OpenAI states that the data shared with ChatGPT may be used to train its models. However, the UK GDPR gives you the right to object to certain kinds of processing. This means that, as a UK user, you can ask OpenAI not to use your data for this purpose.

Here’s how you can do it:

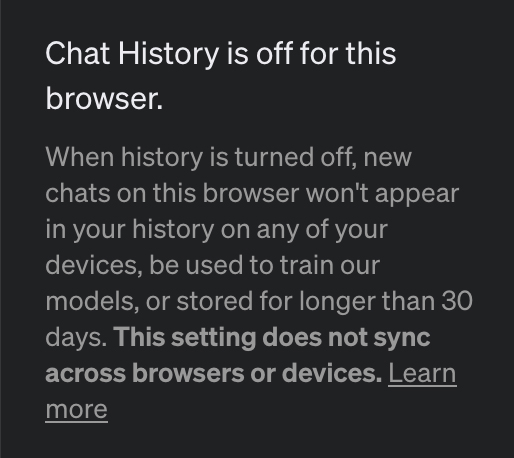

Disable chat history

After several warnings from data protection authorities, OpenAI introduced the option to disable chat history. Go to your account settings, click on “Data controls” and turn off the “Chat history & training” option. This way, the data you enter into ChatGPT conversations will not be used to train OpenAI’s models.

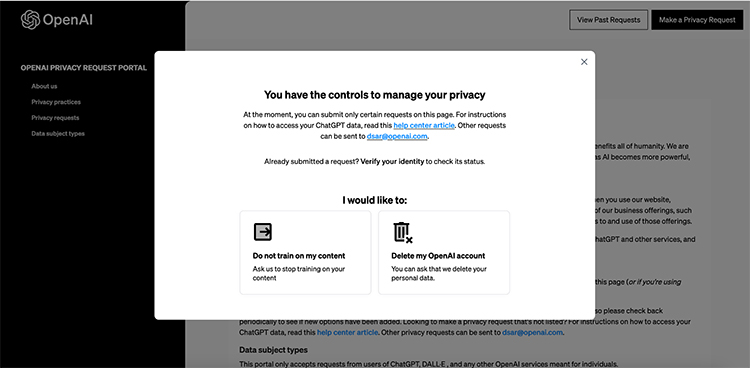

Send a request through the privacy portal

Another way to do this is to send a request through OpenAI’s privacy portal. Click on “Make a privacy request”, select the kind of account you have, and then choose “Do not train on my content”.

In addition, OpenAI has recently released another paid plan – Team – that excludes your data from this type of processing by default.

2. Don’t share sensitive data

As we have already mentioned, artificial intelligence tools can be vulnerable to cyber-attacks. If attackers gain access to your conversations on OpenAI’s servers, they can steal sensitive information.

That is why you should never share sensitive data with ChatGPT, such as phone numbers, email addresses, bank details or health data, especially if it belongs to your customers. The theft of such information by cyber criminals can have serious consequences, both for you and your business.
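
If you often paste text into ChatGPT, one option is to strip obvious personal details from it first. The Python sketch below is only an illustration: the `redact` function and both patterns are our own assumptions, they catch only simple email addresses and phone-like numbers, and they are no substitute for checking the text yourself.

```python
import re

# Hypothetical patterns for illustration only; real data needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Loose match for phone-like runs of digits, spaces, dots, dashes and brackets.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


prompt = "Write a reply to jane.doe@example.com, who called from +44 20 7946 0958."
print(redact(prompt))
```

Names, addresses, bank details and health data will not be caught by patterns like these, so treat the output as a first pass rather than a guarantee.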

3. Remember the best practices

In addition to these ChatGPT-specific tips, don’t forget to follow some best practices when working with your customers’ data. Use a secure, private connection, choose hard-to-guess passwords and update them often, and set up two-factor authentication. This way, you will greatly reduce your risk exposure.
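
As a small illustration of what “hard to guess” can mean in practice, here is a minimal Python sketch that builds a random password with the standard `secrets` module. The function name and the default length of 20 characters are our own assumptions; a password manager will do the same job for you.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Build a random password from letters, digits and punctuation."""
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from a cryptographically secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


print(generate_password())
```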

If you have an online business, it’s also important to comply with the privacy laws that apply to you. Laws such as the UK GDPR may impose additional requirements. For example, you may need to manage users’ consent through a consent management platform, or to record their consent preferences in a dedicated log. Don’t overlook compliance: failing to comply could have a negative impact on your business!
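
To give an idea of what recording consent preferences in a log could look like, here is a minimal Python sketch that appends one timestamped entry per user to a file. Everything in it is hypothetical (the `record_consent` function, the file name, the field names and the preference categories), and it is not a statement of what any law requires; a consent management platform normally handles this for you.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def record_consent(log_path: Path, user_id: str, preferences: dict[str, bool]) -> dict:
    """Append one timestamped consent record to a JSON Lines file."""
    entry = {
        "user_id": user_id,
        "preferences": preferences,
        # Store the time in UTC so entries are comparable across time zones.
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }
    with log_path.open("a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(entry) + "\n")
    return entry


# Hypothetical user and preference categories, for illustration only.
entry = record_consent(
    Path("consent_log.jsonl"),
    "user-123",
    {"analytics": False, "marketing": True},
)
print(entry["recorded_at"])
```

Appending rather than overwriting keeps the history of each user’s choices, which is the point of a log.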
